Sunday, November 2, 2014

Trying Out Property-Based Testing in Clojure

Sunday, October 19, 2014

Trying Out Reference Cursors

As an example of where this might be useful, imagine your data looks like this:

(defonce app-state (atom {:messages [{:sender "Paul" :text "Let It Be"}]

:members [{:name "John" :instrument :guitar}

{:name "George" :instrument :guitar}

{:name "Paul" :instrument :bass}

{:name "Ringo" :instrument :drums}

{:name "Clarence" :instrument :saxophone}]}))

And your UI is structured like this:

(defn main-panel [app owner]

(reify

om/IRender

(render [this]

(dom/div nil

(dom/div #js {:className "row"}

(om/build msgs-panel (:messages app)))

(dom/div #js {:className "row"}

(om/build user-panel (nth (:members app) 0))

(om/build user-panel (nth (:members app) 1))

(om/build user-panel (nth (:members app) 2))

(om/build user-panel (nth (:members app) 3)))))))

The messages panel knows about the messages, and each user panel knows only about the user it represents.

What happens if you want to display each user’s messages in the user panels? You could pass the entire app-state to each panel, but then you have to find another way to indicate which particular user each panel represents.

Reference cursors allow exposing a data hierarchy independently from the ui structure. In this application, we expose the messages:

(defn messages []

(om/ref-cursor (:messages (om/root-cursor app-state))))

We can then observe this cursor in the user panels, as in the let binding here:

(defn user-panel [app owner]

(reify

om/IRender

(render [_]

(let [xs (->> (om/observe owner (messages))

(filter #(= (:sender %) (:name app)))

reverse)]

(dom/div #js {:className "col-xs-3 panel panel-warning msg-log"}

(dom/h4 #js {:className "panel-heading"}

(str (:name app) " " (:instrument app)))

(dom/div #js {:className "panel-body"}

(apply dom/ul #js {:className "list-group"}

(map #(dom/li #js {:className "list-group-item"}

(:text %))

(take 3 xs)))))))))

Even in this small application, reference cursors make for a much cleaner solution. For larger applications, they are going to make a huge difference.

You can find the code for this application on github and you can see the application running here.

Monday, July 21, 2014

Machine Learning in Clojure - part 2

My last post was about doing linear regression with 1 variable. This post will show that the same process works for multiple variables, and then explain why we represent the problem with matrices.

The only code in this post is calling the functions introduced in the last one. I also use the same examples, so post this will make a lot more sense if you read that one first.

For reference, here is the linear regression function:

(defn linear-regression [x Y a i]

(let [m (first (cl/size Y))

X (add-ones x)]

(loop [Theta (cl/zeros 1 (second (cl/size X))) i i]

(if (zero? i)

Theta

(let [ans (cl/* X (cl/t Theta))

diffs (cl/- ans Y)

dx (cl/* (cl/t diffs) X)

adjust-x (cl/* dx (/ a m))]

(recur (cl/- Theta adjust-x)

(dec i)))))))

Because the regression function works with matrices, it does not need any changes to run a regression over multiple variables.

Some Examples

In the English Premier League, a team gets 3 points for a win, and 1 point for a draw. Trying to find a relationship between wins and points gets close to the answer.(->> (get-matrices [:win] :pts)

reg-epl

(print-results "wins->points"))

** wins->points **

A 1x2 matrix

-------------

1.24e+01 2.82e+00

When we add a second variable, the number of draws, we get close enough to ascribe the difference to rounding error.

(->> (get-matrices [:win :draw] :pts)

reg-epl

(print-results "wins+draws->points"))

** wins+draws->points **

A 1x3 matrix

-------------

-2.72e-01 3.01e+00 1.01e+00

In the last post, I asserted that scoring goals was the key to success in soccer.

(->> (get-matrices [:for] :pts)

reg-epl

(print-results "for->points"))

** for->points **

A 1x2 matrix

-------------

2.73e+00 9.81e-01

If you saw Costa Rica in the World Cup, you know that defense counts for a lot too. Looking at both goals for and against can give a broader picture.

(->> (get-matrices [:for :against] :pts)

reg-epl

(print-results "for-against->pts"))

** for-against->pts **

A 1x3 matrix

-------------

3.83e+01 7.66e-01 -4.97e-01

The league tables contain 20 fields of data, and the code works for any number of variables. Will adding more features (variables) make for a better model?

We can expand the model to include whether the goals were scored at home or away.

(->> (get-matrices [:for-h :for-a :against-h :against-a] :pts)

reg-epl

(print-results "forh-fora-againsth-againsta->pts"))

** forh-fora-againsth-againsta->pts **

A 1x5 matrix

-------------

3.81e+01 7.22e-01 8.26e-01 -5.99e-01 -4.17e-01

The statistical relationship we have found suggests that that goals scored on the road are with .1 points more than those scored at home. The difference in goals allowed is even greater; they cost .6 points at home and only .4 on the road.

Wins and draws are worth the same number of points, no matter where the game takes place, so what is going on?

In many sports there is a “home field advantage”, and this is certainly true in soccer. A team that is strong on the road is probably a really strong team, so the relationship we have found may indeed be accurate.

Adding more features indiscriminately can lead to confusion.

(->> (get-matrices [:for :against :played :gd :for-h :for-a] :pts)

reg-epl

(map *)

(print-results "kitchen sink”))

** kitchen sink **

(0.03515239958218979 0.17500425607459014 -0.22696465757628984 1.3357911841232217 0.4019689136508527 0.014497060396707949 0.1605071956778842)

When I printed out this result the first time, the parameter representing the number of games played displayed as a decimal point with no digit before or after. Multiplying each term by 1 got the numbers to appear. Weird.

The :gd stands for “goal difference” it is the difference between the number of goals that a team scores and the number they give up. Because we are also pulling for and against, this is a redundant piece of information. Pulling home and away goals for makes the combined goals-for column redundant as well.

All of the teams in the sample played the same number of games, so that variable should not have influenced the model. Looking at the values, our model says that playing a game is worth 1.3 points, and this is more important than all of the other factors combined. Adding that piece of data removed information.

Let’s look at one more model with redundant data. Lets look at goals for, against and the goal difference, which is just the difference of the two.

(->> (get-matrices [:for :against :gd] :pts)

reg-epl

(print-results "for-against-gd->pts"))

** for-against-gd->pts **

A 1x4 matrix

-------------

3.83e+01 3.45e-01 -7.57e-02 4.21e-01

points = 38.3 + 0.345 * goals-for - 0.0757 * goals-against + 0.421 * goal-difference

The first term, Theta[0] is right around 38. If a team neither scores nor allows any goals during a season, they will draw all of their matches, earning 38 points. I didn’t notice that the leading term was 38 in all of the cases that included both goals for and against until I wrote this model without the exponents.

Is this model better or worse than the one that looks at goals for and goals against, without goal difference. I can’t decide.

Why Matrices?

Each of our training examples have a series of X values, and one corresponding Y value. Our dataset contains 380 examples (20 teams * 19 seasons).Our process is to make a guess as to the proper value for each parameter to multiply the X values by and compare the results in each case to the Y value. We use the differences between the product of our guesses, and the real life values to improve our guesses.

This could be done with a loop. With m examples and n features we could do something like

for i = 1 to m

guess = 0

for j = 1 to n

guess = guess + X[i, j] * Theta[j]

end for j

difference[i] = guess - Y

end for i

We would need another loop to calculate the new values for Theta.

Matrices have operations defined that replace the above loops. When we multiply the X matrix by the Theta vector, for each row of X, we multiply each element by the corresponding element in Theta, and add the products together to get the first element of the result.

Matrix subtraction requires two matrices that are the same size. The result of subtraction is a new matrix that is the same size, where each element is the difference of the corresponding elements in the original matrices.

Using these two operations, we can replace the loops above with

Guess = X * Theta

Difference = Guess - Y

Clearly the notation is shorter. The other advantage is that there are matrix libraries that are able to do these operations much more efficiently than can be done with loops.

There are two more operations that our needed in the linear regression calculations. One is multiplying matrices by a single number, called a scalar. When multiplying a matrix by a number, multiply each element by that number. [1 2 3] * 3 = [3 6 9].

The other operation we perform is called a transpose. Transposing a matrix turns all of its rows into columns, and its columns into rows. In our examples, the size of X is m by n, and the size of Theta is 1 x n. We don’t have any way to multiply an m by n matrix and a 1 by n matrix, but we can multiply a m by n matrix and an n by 1 matrix. The product will be an m by 1 matrix.

In the regression function there are a couple of transposes to make the dimensions line up. That is the meaning of the cl/t expression. cl is an alias for the Clatrix matrix library.

Even though we replaced a couple of calculations that could have been done in loops with matrix calculations, we are still performing these calculations in a series of iterations. There is a technique for calculating linear regression without the iterative process called Normal Equation.

I am not going to discuss normal equation for two reasons. First, I don’t understand the mathematics. Second the process we use, Gradient Descent, can be used with other types of machine learning techniques, and normal equation cannot.

Saturday, July 5, 2014

Linear Regression in Clojure, Part I

Recently, a new book has come out, Clojure for Machine Learning. I am only a couple of chapters in, but it has already been a good help to me. I do agree with this review that the book is neither a good first Clojure book, or a good first machine learning resource, but it does join the two topics well.

Linear Regression

The place to start with machine learning is Linear Regression with one variable. The goal is to come up with an equation in the familiar form of y = mx + b, where x is the value you know and y is the value you are trying to predict.

Linear regression is a supervised learning technique. This means that for each of the examples used to create the model the correct answer is known.

We will use slightly different notation to represent the function we are trying to find. In place of b we will put Theta[0] and in place of m we will put Theta[1]. The reason for this, is that we are going to be using a generalized technique that will work for any number of variables, and the result of our model will be a vector called Theta.

Even though our technique will work for multiple variables, we will focus on predicting based on a single variable. This is conceptually a little simpler, but more importantly it allows us to plot the input data and our results, so we can see what we are doing.

The Question

A number of years ago I read the book Moneyball, which is about the application of statistics to baseball. One of the claims in the book is that the best predictor for the number of games a baseball team wins in a season is the number of runs they score that season. To improve their results, teams should focus on strategies that maximize runs.

The question I want to answer is whether the same is true in soccer: Are the number of points a team earns in a season correlated with the number of goals that they score. For any that don’t know, a soccer team is awarded 3 points for a win and 1 point for a tie.

The importance of goals is a relevant question for a Manchester United fan. At the end of the 2012-13 season, head coach Sir Alex Ferguson retired after winning his 13th Premier League title. He was replaced by David Moyes. Under Moyes the offense which had been so potent the year before looked clumsy. Also, the team seemed unlucky, giving up goals late in games, turning wins into draws and draws into defeats. The team that finished 1st the year before finished 7th in 2013-14. Was the problem a bad strategy, or bad luck?

The Data

I have downloaded the league tables for the last 19 years of the English Premier League from stato.com. There have actually been 22 seasons in the Premier League, but in the first 3 seasons each team played 42 games, vs 38 games for the last 19 seasons, and I opted for consistency over quantity.

I actually want to run 3 regressions, first one on a case where I am sure there is a correlation, then on a case where I am sure there is not, and then finally to determine whether a correlation exists between goals and points.

There should be a high correlation between the number of wins a team has and their number of points. Since every team plays the same number of games, there should be no correlation between the number of games played and a teams position in the standings.

The Process

We will use a technique called gradient descent to find the equation we want to use for our predictions. We will start with an arbitrary value for Theta[0] and Theta[1]; setting both to 0. We will multiply each x value by Theta[1] and add Theta[0], and compare that result to the corresponding value of Y. We will use the differences between Y and the results of Theata * X to calculate new values for Theta, and repeat the process.

One way of measuring the quality of the prediction is with a cost function that measures the mean square error of the predictions.

1/2m * sum(h(x[i]) - y[i])^2

Where m is the number of test cases we are evaluating, and h(x[i]) is the predicted value for a test case i. We will not use the cost function directly, but its derivative is used in improving our predictions of Theta as follows:

Theta[0] = Theta[0] - alpha * 1/m * sum(h(x[i]) - y([i])

Theta[1] = Theta[1] - alpha * 1/m * sum((h(x[i]) - y([i]) * x[i])

We have added one more symbol here. alpha is called the learning rate. The learning rate determines how much we modify Theta each iteration. If alpha is set too high, the process will oscillate between Thetas that are too low and two high and the process will never converge. When alpha is set lower than necessary, extra iterations are necessary to converge.

I need to mention again that this methodology and these equations come directly from Professor Ng’s machine learning course on Coursera that I linked above. He spends over an hour on linear regression with one variable, and if you want more information that is the place to go.

The Code

The actual calculations we are going to do are operations on matrices. When we multiply the matrix X by the matrix Theta, we obtain a matrix of predictions that can be compared element by element with the matrix Y. The same results could be obtained by looping over each test case, but expressing the computations as matrix operations yields simpler equations, shorter code and better performance.

I used the clatrix matrix library for the calculations.

One other thing to note, in the equations above, Theta[0] is treated differently than Theta[1], it is not multiplied by any x terms, either in the predictions or in the adjustments after the predictions. If we add an additional column to our X matrix, an X[0], and make all of the values in this column 1, we then no longer have to make a distinction between Theta[0] and Theta[1].

(defn add-ones "Add an X[0] column of all 1's to use with Theta[0]"

[x]

(let [width (first (cl/size x))

new-row (vec (repeat width 1))

new-mat (cl/matrix new-row)]

(cl/hstack new-mat x)))

(defn linear-regression [x Y a i]

(let [m (first (cl/size Y))

X (add-ones x)]

(loop [Theta (cl/zeros 1 (second (cl/size X))) i i]

(if (zero? i)

Theta

(let [ans (cl/* X (cl/t Theta))

diffs (cl/- ans Y)

dx (cl/* (cl/t diffs) X)

adjust-x (cl/* dx (/ a m))]

(recur (cl/- Theta adjust-x)

(dec i)))))))

The linear-regression function takes as parameters the X and Y values that we use for training, the learning rate and the number of iterations to perform. We add a column of ones to the passed in X values. We initialize the Theta vector, setting all the values to 0.

At this point X is a matrix of 380 rows and 2 columns. Theta is a matrix of 1 row and 2 columns. If we take the transpose of Theta (turn the rows into columns, and columns into rows) we get a new matrix, Theta’ which has 2 rows and 1 columns. Multiplying the matrix X with Theta’ yields a matrix of 380x1 containing all of the predictions, and the same size as Y.

Taking the difference between the calculated answers and our known values yields a 380x1 matrix. We transpose this matrix, making it 1x380, and multiply it by our 380x2 X matrix, yielding a 1x2 matrix. We multiply each element in this matrix by a and divide by m, ending up with a 1x2 matrix which has the amounts we want to subtract from Theta, which is also a 1x2 matrix. All that is left to do is recur with the new values for Theta.

The Results

Since I am going to be performing the same operations on three different data sets, I wrote a couple of helper functions. plot-it uses Incanter to display a scatter plot of the data. reg-epl calls the linear-regression function specifying a learning rate of .0001 and 1000000 iterations. I also have a get-matrices function, which downloads the data and creates the X and Y matrices for the specified fields.

(def wins (get-matrices [:win] :pts))

(plot-it wins)

(def win-theta (reg-epl wins))

(println "Wins-points: " win-theta)

Yields this graph

and these results

Wins-points: A 1x2 matrix

-------------

1.24e+01 2.82e+00

The relationship between wins and points is obvious in the graph. The equation we developed estimates wins as being worth 2.82 points, instead of the correct 3. This is because it had no way to account for draws, and use a high intercept to get those extra points in there.

A team with 0 wins would be expected to have 12.4 points. A team with 10 wins would have 12.4 + 2.82 * 10 = 40.6 points. A team with 20 wins would have 12.4 + 2.82 * 25 =

82.9 points.

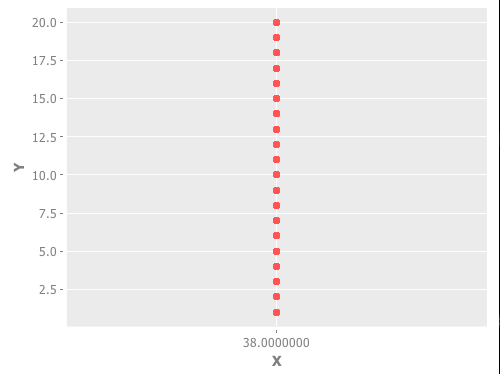

(def played (get-matrices [:played] :rank))

(plot-it played)

(def played-theta (reg-epl played))

(println "played-rank: " played-theta)

(println "expected finish:" (+ (first played-theta)

(* 38 (second played-theta))))

Playing 38 games gives you an equal chance of having a finishing position anywhere between 1 and 20. The graph gives a good illustration of what no-correlation looks like.

If we use the terms in Theta to find the expected finishing position for a team playing 38 games, we find exactly what we expect, 10.5.

played-rank: A 1x2 matrix

-------------

7.27e-03 2.76e-01

expected finish: 10.499999999999996

Ok, now that we have seen what it looks like when we have a strong correlation, and no correlation, is there a correlation between goals and points?

(def goals (get-matrices [:for] :pts))

(plot-it goals)

(def goal-theta (reg-epl goals))

(def goal-lm (find-lm goals))

(println "goals-points: " goal-theta)

(println "goals-points (incanter): " goal-lm)

Looking at the graph, while not quite as sharp as the goals-points graph, it definitely looks like scoring more goals earns you more points.

To double check my function, I also used Incanter’s linear-model function to also generate an intercept and slope. (And yes, I am relieved that they match).

goals-points: A 1x2 matrix

-------------

2.73e+00 9.81e-01

goals-points (incanter): [2.7320304686089685 0.9806635460888629]

We can superimpose the line from our regression formula on the graph, to see how they fit together.

(def goal-plot (scatter-plot (first goals) (second goals)))

(defn plot-fn [x]

(+ (* (second goal-theta) x) (first goal-theta)))

(def plot-with-regression (add-function goal-plot plot-fn 0 100))

(view plot-with-regression)

The Answer

We can calculate how many points we would expect the team to earn based on their 86 goals in 2012-13 and 64 goals in 2013-14.

(println "86 goals = " (+ (first goal-theta)

(* (second goal-theta) 86)))

(println "64 goals = " (+ (first goal-theta)

(* (second goal-theta) 64)))

86 goals = 87.07011197597255

64 goals = 65.49481001604704

In the last year under Sir Alex, Manchester United earned 89 points, 2 more than the formula predicts. In their year under David Moyes, they earned 64 points, 1.5 less than the formula predicts.

Of the 25 point decline in Manchester United’s results, 21.5 points can be attributed to the failure of the offense under Moyes, and 3.5 points can be attributed to bad luck or other factors.

Manchester United’s attacking style isn’t just fun to watch, it is also the reason they win so much. Hopefully the team’s owners have learned that lesson, and will stick to attack minded managers in the future.

You can find all of the code for the project on github.

Thursday, May 22, 2014

Chess Clocks with ClojureScript

Recently I watched a talk by David Nolen about Clojure's core.async library. For anyone who wanting to learn about core.async, this talk is a great place to start.

In the talk David demonstrated a process function that used one channel as a control, to turn off and on output on another channel. He did not have time to go into detail about how it worked, so I wanted to build something with it to make sure I understood it.

A pair of chess clocks is a system that has two processes that are turned off and on by pushing buttons. I used Om for the rendering. You can see the complete code on github.

The operation of each clock is represented by a function called counter. It takes an om cursor with the time it will be counting and a control channel which turns the counter off and on.

(defn counter [app control]

(go

(loop [clock-state (cycle [:off :on])]

(do

(let [t (timeout 1000)

[v c] (alts! [t control])]

(cond

(and (= (first clock-state) :on)

(= c t))

(do

(om/transact! app :time minus-sec)

(recur clock-state))

(and (= (first clock-state) :off)

(= c control)

(= v :start))

(recur (next clock-state))

(and (= (first clock-state) :on)

(= c control)

(= v :stop))

(recur (next clock-state))

(and (= c control)

(= v :end))

(.log js/console "game over")

:else

(recur clock-state)))))))

Each time through the loop the function listens on the control channel and also sets a timeout for 1 second. If there is a message on the control channel, the clock state is adjusted and the loop repeats. If a timeout occurs and the clock is on, om/transact! is called to subtract a second from the cursor. If the timeout occurs when the clock is not on, then it repeats the loop without subtracting the second. If there is an :end message in the control channel, then the loop exits.

The UI for each clock is defined in the clock-view function. As an om component it gets its state from the cursor passed in as the first argument, but it can also have state passed in an option map by its parent function.

(defn clock-view [app owner]

(reify

om/IWillMount

(will-mount [_]

(let [input (om/get-state owner :input)

ctrl (counter app input)]))

om/IRenderState

(render-state [this {:keys [master]}]

(let [tag (:tag app)]

(dom/div #js {:className "clock"}

(dom/h3 nil (str "Player " (name tag)))

(dom/h3 #js {:className "clockface"} (time->string (:time app)))

(dom/button #js {:onClick

(fn [e] (put! master tag))} "Move"))))))

The local state for the clock view contains two channels. The input channel is used to by the counter function that controls the clock. In om the appropriate place for controls is the IWillMount protocol. The master channel is needed only at rendering time, where it is used in an event handler for the clock’s Move button.

Messages are put onto the control channel for the clocks by the switch-clock function.

(defn switch-clock [tag wc bc msgchan]

(go

(cond

(= tag :white)

(do

(>! wc :stop)

(>! bc :start)

(>! msgchan "Black to move"))

(= tag :black)

(do

(>! wc :start)

(>! bc :stop)

(>! msgchan "White to move"))

(= tag :end)

(do

(>! wc :end)

(>! bc :end)

(>! msgchan "Game over")))))

The possible tags are :white for the button on the white clock being pressed, :black for the button on the black clock and :end. The game can end when either the End Game button is pressed, or when either clock runs out of time.

The parent of the clock views is the board-view function. The board view creates tells the clock-view function where to draw itself and passes each clock its portion of the cursor and creates the component local state with the channels that each component needs for communication.

(defn board-view [app owner]

(reify

om/IInitState

(init-state [_]

{:white-control (chan)

:black-control (chan)

:message (chan)})

om/IWillMount

(will-mount [_]

(let [main (om/get-state owner :main-control)

message (om/get-state owner :message)

wc (om/get-state owner :white-control)

bc (om/get-state owner :black-control)]

(go (loop []

(let [tag (<! main)]

(switch-clock tag wc bc message)

(recur))))

(go (loop []

(let [msg (<! message)]

(om/update! app [:msg] msg)

(recur))))))

om/IRenderState

(render-state [this {:keys [white-control black-control main-control message]}]

(dom/div nil

(dom/h2 #js {:className "header"} "Chess Clocks")

(dom/h3 #js {:className "message"} (:msg app))

(dom/button #js {:onClick

(fn [e] (put! main-control :end))}

"End Game")

(dom/div #js {:className "clocks"}

(om/build clock-view (:white-clock app)

{:init-state {:master main-control

:input white-control}})

(om/build clock-view (:black-clock app)

{:init-state {:master main-control

:input black-control}}))))))

The control channels for the white clock and black clock are created in the IInitState implementation and will be passed to the individual clocks and to the switch clock function. switch-clock also needs a channel to write messages that will be displayed to the user.

The IWillMount function uses a fourth channel, :main-control that is passed in from the root function. The main-control channel is where messages are written when the buttons are clicked or when time runs out. The first go loop listens for messages on the main control channel and sends them to the switch-clock function. The second go loop listens for messages to be displayed to the user.

IRenderState gets a reference to each of the channels it needs. Whether they were created in IInitState or passed in the root function they are contained in the state map.

The main channel gets written to in the event handler for the End Game button. This channel is also passed to the clock-view function, because each clock view contains a button that writes to the channel.

The main-control channel is created outside of the board-view function, because in addition to the buttons on the UI, there is one other event that needs to write to it. When either clock runs out the game needs to end. This is accomplished using the :tx-listen option in the om/root function.

(om/root

board-view

app-state

(let [main (chan)]

{:target (. js/document (getElementById "app"))

:init-state {:main-control main}

:tx-listen (fn [update new-state]

(when (= (:new-value update) {:min 0 :sec 0})

(put! main :end)))}))

:tx-listen allows you to specify a function that will be called whenever the root app-state changes. The function takes two arguments. The first contains information about the update, with tags :path, :old-value :new-value, :old-state and :new-state. The second parameter is the cursor in its new state.

In this case, I check the new value in the update to see if the time has run out, so that I can end the game.

One problem I had that I want to call attention to is the difference between a map and a cursor. Within the render phase of a function, app is effectively a map, and the data elements can be accessed with the appropriate key. Outside of the render phase, the values inside of the cursors are available only after dereferencing the cursor.

Om's introductory tutorial explicitly calls attention to the fact that outside of the render phase you have to deref the cursor, but in an earlier version of my code I had a go loop, inside of a let, inside of a function, that was called by a component; and I had trouble keeping straight whether I was using a cursor or its data on any given line.

I did not play with it enough to give a detailed explanation, but hopefully just being aware of the issue can save you some debugging time.

Wednesday, April 9, 2014

Asynchronous Naiveté

This post has been heavily edited. Initially it was describing some behavior I didn't expect using core.async in both ClojureScript and Clojure. It turns out the behavior I observed in ClojureScript was a bug, that has now been fixed. The Clojure and ClojureScript versions can still behave differently than each other, depending on the lifetime of the main thread in Clojure. I have rewritten this to focus on that.

I created a buffered channel in ClojureScript, and tried to put 3 values into it:

(let [c (chan 1)] (go (.log js/console (<! c))) (go (>! c 1) (.log js/console "put one") (>! c 2) (.log js/console "put two") (>! c 3) (.log js/console "Put all my values on the channel")))

The result:

put one put two 1

Running the equivalent code in a Clojure REPL

(let [c (chan 1)]

(go

(println (<! c)))

(go

(>! c 1)

(println "put one")

(>! c 2)

(println "put two")

(>! c 3)

(println "put all of my values on the channel")))

Gives the same 3 lines printed out though which line has the number 1 can change from invocation to invocation.

put one 1 put two

When I put this in a -main method and ran it with lein run, I got no results at all.

Adding Thread/sleep the -main function causes the expected results to return. Though, again which order the 1 gets printed in can vary.

(defn -main [& args]

(let [c (chan 1)]

(go

(println (<! c)))

(go

(>! c 1)

(println "put one")

(>! c 2)

(println "put two")

(>! c 3)

(println "put all of my values on the channel"))

(Thread/sleep 1000)))

put one put two 1

Putting the main thread to sleep gives the go blocks time to execute. For confirmation of what is happening, we can check what thread each operation is happening on.

(defn -main [& args]

(let [c (chan 1)]

(go

(println (<! c))

(println (str "out " (.getId (Thread/currentThread)))))

(go

(>! c 1)

(println "put one")

(println (str "in1 " (.getId (Thread/currentThread))))

(>! c 2)

(println "put two")

(println (str "in2 " (.getId (Thread/currentThread))))

(>! c 3)

(println "put all of my values on the channel"))

(Thread/sleep 1000)

(println (str "main " (.getId (Thread/currentThread))))))

When I ran it I had the first go block on thread 10, the second on 11 and the main thread of execution on thread 1.

put one in1 11 put two in2 11 1 out 10 main 1

If we remove the Thread/sleep expression, but leave the calls to println, our output is:

main 1

This is just demo code. I don't know when in production systems your main thread would be likely to exit before asynchronous tasks are complete.

I do think it is interesting that the blocks of code I have run have executed in the same order every time in ClojureScript, but that the order can vary when running on the JVM. I do not know if that would be true of more complicated examples or on different browsers. I have done all of my tests with Google Chrome.

Friday, March 28, 2014

Simulated stock ticker with core.async

I created a simulated stock ticker in Clojure using core.async.

(ns ticker.core

(:require [clojure.core.async

:refer [chan ! timeout go]

:as async]))

In core.async functions in your program communicate over channels. There are several ways to put items into a channel and take them off, but in this project only uses >! and <!. These functions must be called within a 'go block'.

<! takes a value off of a channel when one is available. If no value is available, execution within the go block is suspended until a value becomes available, at which time execution resumes.

>! does just the opposite. It puts a value onto a channel when a channel can accept a value, and suspends if the channel is not ready to accept a value. An unbuffered channel can only accept a new value when another block is trying to take a value off of the channel. Channels can use buffers to accept values before readers are ready for them, but this project doesn't use them.

The other core.async function that I refer is timeout. timeout creates a channel that closes after a specified number of milliseconds. Reading from a closed channel immediately returns nil. As the name suggests, this can be used to implement timeouts, but it can also be used to create delays.

I have a couple of utility functions for adding some randomness to the price and timing of transactions. I also have a function that creates a map to represent each transaction.

(defn adjust-price [old-price]

(let [numerator (- (rand-int 30) 15)

adjustment (* numerator 0.01M)]

(+ old-price adjustment)))

(defn random-time [t]

(* t (+ 1 (rand-int 5))))

(defn new-transaction [symbol price]

{:symbol symbol

:time (java.util.Date.)

:price price})

The make-ticker function takes a stock symbol, a minimum number of milliseconds between transactions and a starting price. It returns a channel that will have a new transactions placed on it after a random interval as long as there is a listener to take the transactions off of the channel.

(defn make-ticker [symbol t start-price]

(let [c (chan)]

(go

(loop [price start-price]

(let [new-price (adjust-price price)]

(<! (timeout (random-time t)))

(>! c (new-transaction symbol new-price))

(recur new-price))))

c))

This function creates a channel, and then sets up an infinite loop that puts messages on to that channel. In terms of execution order what really happens is the channel is created and returned, a new price is calculated and the timeout is encountered. After the timeout has expired, a new value is put on the channel and we repeat the loop.

One thing that can get tricky with core.async is the lifetime of the channels. It is important to create the main channel 'c' outside of the loop because it needs to exist for the entire lifetime of the ticker. If it is created inside of the loop, each message will be on a new channel.

The timeout channel must be created inside of the loop. Each timeout is for a single use. If the timeout were created outside of the go loop, we would wait for the timeout channel to close during the first iteration. Later attempts to read the same channel would return immediately.

I created a collection of stocks symbols along with arbitrary values to use for the time interval and starting price.

(def stocks [ ;; symbol min-interval starting-price

["AAPL" 1400 537 ]

["AMZN" 4200 345]

["CSCO" 400 22]

["EBAY" 1200 55]

["GOOG" 8200 1127]

["IBM" 2200 192]

["MSFT" 500 40]

["ORCL" 1000 39]

["RHT" 10200 53]

["T" 600 35]])

Each stock symbol will have its transactions created on its own channel. For the ticker, we want to create a single channel that combines the outputs of each stock's channel. the merge function does exactly that, it takes several channels as inputs, and combines their outputs into a single channel.

(defn run-sim []

(let [ticker (async/merge

(map #(apply make-ticker %) stocks))]

(go

(loop [x 0]

(when (< x 1000)

(do (println (str x "-" (<! ticker)))

(recur (inc x))))))))

This function creates channels for each of the stock symbols and combines their outputs into a channel called ticker. It then creates a loop within a go block that will run until 1000 transactions have been printed out.

I chose to call the merge function with async/merge instead of bringing merge in with :refer. The other core.async functions have names that make it clear that they pertain to core.async. Merge is a name that could apply to lots of different things, so I wanted to be explicit.

Friday, March 14, 2014

Ohms Law Calculator in Om

Reading David Nolen's tweets and blog posts about his new ClojureScript UI library, Om got me excited to try it out. If you haven't read about it yet, The Future of JavaScript MVC Frameworks is a good place to start.

David has also written a couple of tutorials. I was working through the introductory tutorial. I stopped short of the section on "Higher Order Components" to see if I could I apply what I had learned to that point.

I created a very simple Ohm's Law calculator. I borrowed liberally from David's code but there are enough differences that a comparison may be informative. I have put the code out on github.

I hope this is a useful supplement to David's tutorial, but it may make little sense on its own.

With Om you build your user interface in components. Each component is defined with om/root. root takes a component constructor function, an associative data structure that contains application data, and a map of options for the rendering functions, one of which must be the target dom element to be rendered.

(def app-state (atom {:result ""}))

(om/root

calculator-view

app-state

{:target (. js/document (getElementById "app"))})

The function passed to om/root takes as arguments application state and what the documentation calls the "owning pure node" and returns a reified object that must implement either IRender or IRenderState, and may implement other life cycle protocols. You will see second parameter is called 'owner' which is created by Om. It is this owner that contains component local state, as you will find in the entry-pane function later on.

Components built with om/root may be composed of smaller components. These subcomponents are created with the om/build function. Like root, build takes a two argument function that returns an object that implements either IRender or IRenderState and optionally other protocols. Build also needs to be passed in the state the component requires, and may be passed a map of options.

In David's contact list example, the display for each contact was rendered by its own subcomponent. The state for each was a map containing details for a single contact. He constructed these components with om/build-all, passing it his constructor function and a vector of contacts. om/build-all maps om/build over the vector of individual contacts.

In my example, I am constructing two subcomponents, neither of which display a sequence of data, so I call om/build twice.

(defn calculator-view [app owner]

(reify

om/IRender

(render [this]

(dom/div nil

(om/build entry-pane app)

(om/build result-pane app)))))

(defn result-pane [app owner]

(reify

om/IRender

(render [this]

(dom/div #js {:className "result-pane"}

(dom/label #js {:className "result"} (:result app))))))

In David's example, his components both implemented IRenderState. That was because his contact-view created a core.async channel that was placed in each component's state to allow for delete messages to be sent from the child components to the parent. My calculator-view and result-pane share nothing except the app-state which is available in IRender.

My entry-pane function does rely on component state. I copied David's implementation of handling button clicks with a channel and the keyboard events with an anonymous function that calls a handle-change function. The channel and the values of the text box are all state that is needed only within the component.

(defn entry-pane [app owner]

(reify

om/IInitState

(init-state [_]

{:calculate (chan)

:ohms ""

:amps ""

:volts ""})

om/IWillMount

(will-mount [_]

(let [calculate (om/get-state owner :calculate)]

(go (loop []

(let [inputs (<! calculate)]

(om/transact! app

(fn [xs]

(assoc xs :result (do-calc inputs))))

(recur))))))

om/IRenderState

(render-state [this state]

(dom/div #js {:className "entry-pane"}

(dom/h3 nil "Ohm's Law Calculator")

(dom/label nil "Resistance")

(dom/input #js {:type "text" :ref "ohms"

:value (:ohms state)

:onChange #(handle-change % owner :ohms state)})

(dom/label nil "Current")

(dom/input #js {:type "text" :ref "amps"

:value (:amps state)

:onChange #(handle-change % owner :amps state)})

(dom/label nil "Voltage")

(dom/input #js {:type "text" :ref "volts"

:value (:volts state)

:onChange #(handle-change % owner :volts state)})

(dom/button #js {:className "button"

:onClick

(fn [e] (put! (:calculate state) state))}

"Calculate")))))

When the user clicks the calculate button the click handler puts the state map onto the calculate channel. The go block in the IWillMount implementation takes the state map from the channel and passes it to the calculate function which returns a string. The :result is set in the app state using the om/transact!. This mutates the state and triggers a re-render.

The handle-change function calls om/set-state! to update the local state with the data entered by the user. set-state! mutates the data and triggers a re-render just like transact! does. The difference is set-state! operates on state contained within a component.

(defn handle-change [e owner key state]

(let [value (.. e -target -value)

text (key state)

allowed (set (seq (str (range 10))))]

(if (every? allowed (seq value))

(om/set-state! owner key value)

(om/set-state! owner key text))))

The concepts in Om are still new to me. I have explained my rationale for the code I have written. If you are aware of any mistakes, please leave a comment letting me know where I have gone wrong.

Saturday, March 8, 2014

ML Class Notes: Lesson 1 - Introduction

I am taking the Machine Learning class at Coursera. These are my notes on the material presented by Professor Ng.

The first lesson introduces a number of concepts in machine learning. There is no code to show until the first algorithm is introduced in the next lesson.

Machine learning grew out of AI research. It is a field of study that gives computers the ability to learn algorithms and processes that can not be explicitly programmed. Computers could be programmed to do simple things, but doing more complicated things required the computer learn itself. A well posed learning program is said to learn some task if its performance improves with experience.

Machine Learning is used for a lot of things including data mining in business, biology and engineering; performing tasks that can't be programmed by hand like piloting helicopters or computer vision; self-customizing programs like product recommendations; and as a model to try to understand human learning.

Two of the more common categories of machine learning algorithms are supervised and unsupervised learning. Other categories include reinforcement learning and recommender systems, but they were not described in this lesson.

Supervised Learning

In supervised learning the computer taught to make predictions using a set of examples where the historical result is already known. One type of supervised learning tasks is regression where the predicted value is in a continuous range (the example given was predicting home prices). Other supervised learning algorithms perform classification where examples are sorted into two or more buckets (the examples given were of email, which can be spam or not spam; and tumor diagnosis which could be malignant or benign.)

Unsupervised Learning

In unsupervised learning, the computer must teach itself to perform a task because the "correct" answer is not known. A common supervised learning task is clustering. Clustering is used to group data points into different categories based on their similarity to each other. Professor Ng gave the the example of Google News, which groups related news articles, allowing you to select accounts of the same event from different news sources.The unsupervised learning discussion ended with a demonstration of an algorithm that had been used to solve the "cocktail party problem", where two people were speaking at the same time in the same room, and were recorded by two microphones in different parts of the room. The clustering algorithm was used to determine which sound signals were from each speaker. In the initial recordings, both speakers could be heard on both microphones. In the sound files produced by the learning algorithm, each output has the sound from one speaker, with the other speaker almost entirely absent.

Sunday, February 23, 2014

Take the Machine Learning Class at Coursera

Coursera is offering its Machine Learning course again, beginning March 8, and I highly recommend it. You already know the obvious, that it is a course on an incredibly timely career skill and it is free, but until you take the course you can't know just how good the course really is.

You will learn how to write algorithms to perform linear regression, logistic regression, neural networks, clustering and dimensionality reduction. Throughout the course Professor Ng explains the techniques that are used to prepare data for analysis, why particular techniques are used, and how to determine which techniques are most useful for a particular problem.

In addition to the explanation of what and why, there is an equal amount of explaining how. The 'how' is math, specifically linear algebra. From the first week to the last, Ng clearly explains the mathematical techniques and equations that apply to each problem, how the equations are represented with linear algebra, and how to implement each calculation in Octave or Matlab.

The course has homework. Each week, there is a zip file that contains a number of incomplete matlab files that provide the structure for the problem to be solved, and you need to implement the techniques from the week's lessons. Each assignment includes a submission script that is run from the command line. You submit your solution, and it either congratulates you for getting the right answer, or informs you if your solution was incorrect.

It is possible to view all of the lectures without signing up for the class. Don't do that. Sign up for the class. Actually signing up for the class gives you a schedule to keep to. It also allows you to get your homework checked. When you watch the lectures, you will think you understand the material; until you have done the homework you really don't. As good as the teaching is, the material is still rigorous enough that it will be hard to complete if you are not trying to keep to a schedule. Also, if you complete the course successfully, you will be able to put it on your resume and LinkedIn profile.

You have the time. When I took the class, there was extra time built in to the schedule to allow people who started the course late to stay on pace. Even if you fall behind, the penalty for late submission is low enough that it is possible to complete every assignment late and still get a passing grade in the course.

I am going to take the course again. I want to make review the material. I also want to try to implement the homework solutions in Clojure, in addition to Octave. I will be posting regularly about my progress.

You may also be able to find a study group in your area. I decided to retake the course when I found out that there was going to be a meetup group in my area. Even without a local group, the discussion forums are a great source of help throughout the class. The teaching assistants and your classmates provide a lot of guidance when you need it.