Several months ago I recommended the Machine Learning course from Coursera. At the time, I intended to retake the course and try to implement the solutions to the homework in Clojure. Unfortunately, I got involved in some other things, and wasn’t able to spend time on the class.

Recently, a new book has come out, Clojure for Machine Learning. I am only a couple of chapters in, but it has already been a good help to me. I do agree with this review that the book is neither a good first Clojure book nor a good first machine learning resource, but it does join the two topics well.

Linear Regression

The place to start with machine learning is Linear Regression with one variable. The goal is to come up with an equation in the familiar form of y = mx + b, where x is the value you know and y is the value you are trying to predict.

Linear regression is a supervised learning technique. This means that for each of the examples used to create the model the correct answer is known.

We will use slightly different notation to represent the function we are trying to find. In place of b we will put Theta[0] and in place of m we will put Theta[1]. The reason for this is that we are going to be using a generalized technique that will work for any number of variables, and the result of our model will be a vector called Theta.
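To make the Theta notation concrete, here is a minimal sketch in plain Clojure. The name `hypothesis` and the sample numbers are made up for illustration; this is not the code used later in the post. It prepends a constant 1 to the inputs so that Theta[0] needs no special handling.

```clojure
(defn hypothesis
  "Predicted value for one example: Theta[0] + Theta[1]*x[1] + ...
   theta and features are plain Clojure vectors (an assumption of this sketch)."
  [theta features]
  ;; the leading 1 pairs with Theta[0], the intercept
  (reduce + (map * theta (cons 1 features))))

;; one variable: 1 + 2*5
(def one-var (hypothesis [1 2] [5]))

;; two variables: 1 + 2*10 + 3*100
(def two-var (hypothesis [1 2 3] [10 100]))

(println one-var two-var) ; prints 11 321
```

The same function covers one variable or many, which is the reason for switching from m and b to a Theta vector.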

Even though our technique will work for multiple variables, we will focus on predicting based on a single variable. This is conceptually a little simpler, but more importantly it allows us to plot the input data and our results, so we can see what we are doing.

The Question

A number of years ago I read the book Moneyball, which is about the application of statistics to baseball. One of the claims in the book is that the best predictor for the number of games a baseball team wins in a season is the number of runs they score that season. To improve their results, teams should focus on strategies that maximize runs.

The question I want to answer is whether the same is true in soccer: Is the number of points a team earns in a season correlated with the number of goals it scores? For anyone who doesn’t know, a soccer team is awarded 3 points for a win and 1 point for a tie.

The importance of goals is a relevant question for a Manchester United fan. At the end of the 2012-13 season, head coach Sir Alex Ferguson retired after winning his 13th Premier League title. He was replaced by David Moyes. Under Moyes the offense which had been so potent the year before looked clumsy. Also, the team seemed unlucky, giving up goals late in games, turning wins into draws and draws into defeats. The team that finished 1st the year before finished 7th in 2013-14. Was the problem a bad strategy, or bad luck?

The Data

I have downloaded the league tables for the last 19 years of the English Premier League from stato.com. There have actually been 22 seasons in the Premier League, but in the first 3 seasons each team played 42 games, vs 38 games for the last 19 seasons, and I opted for consistency over quantity.

I want to run three regressions: first on a case where I am sure there is a correlation, then on a case where I am sure there is none, and finally on goals and points, to determine whether a correlation exists there.

There should be a high correlation between the number of wins a team has and their number of points. Since every team plays the same number of games, there should be no correlation between the number of games played and a team’s position in the standings.

The Process

We will use a technique called gradient descent to find the equation we want to use for our predictions. We will start with arbitrary values for Theta[0] and Theta[1], setting both to 0. We will multiply each x value by Theta[1] and add Theta[0], and compare that result to the corresponding value of Y. We will use the differences between Y and the results of Theta * X to calculate new values for Theta, and repeat the process.

One way of measuring the quality of the prediction is with a cost function that measures the mean squared error of the predictions.

1/(2m) * sum((h(x[i]) - y[i])^2)

Where m is the number of test cases we are evaluating, and h(x[i]) is the predicted value for a test case i. We will not use the cost function directly, but its derivative is used in improving our predictions of Theta as follows:

Theta[0] = Theta[0] - alpha * 1/m * sum(h(x[i]) - y[i])

Theta[1] = Theta[1] - alpha * 1/m * sum((h(x[i]) - y[i]) * x[i])

We have added one more symbol here. alpha is called the learning rate. The learning rate determines how much we modify Theta each iteration. If alpha is set too high, the process will oscillate between Thetas that are too low and too high, and will never converge. When alpha is set lower than necessary, extra iterations are needed to converge.
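The cost function and the two update rules can be sketched directly in plain Clojure, looping over the examples rather than using matrices. The function names (`predict`, `cost`, `step`, `descend`) and the three-point toy data set are assumptions made for this illustration; they are not part of the project code.

```clojure
(defn predict
  "h(x) = Theta[0] + Theta[1] * x"
  [[t0 t1] x]
  (+ t0 (* t1 x)))

(defn cost
  "Cost: 1/(2m) * sum((h(x[i]) - y[i])^2)."
  [theta xs ys]
  (let [m    (count xs)
        errs (map #(- (predict theta %1) %2) xs ys)]
    (/ (reduce + (map #(* % %) errs)) (* 2.0 m))))

(defn step
  "One simultaneous update of Theta[0] and Theta[1] with learning rate alpha."
  [[t0 t1 :as theta] xs ys alpha]
  (let [m    (count xs)
        errs (map #(- (predict theta %1) %2) xs ys)]
    [(- t0 (* alpha (/ (reduce + errs) m)))
     (- t1 (* alpha (/ (reduce + (map * errs xs)) m)))]))

(defn descend
  "Start from Theta = [0 0] and apply step the given number of times."
  [xs ys alpha iterations]
  (nth (iterate #(step % xs ys alpha) [0.0 0.0]) iterations))

;; toy data lying exactly on y = 1 + 2x
(def xs [1.0 2.0 3.0])
(def ys [3.0 5.0 7.0])

(def good (descend xs ys 0.1 5000)) ; small alpha: settles near [1 2]
(def bad  (descend xs ys 1.0 50))   ; alpha too high for this data: blows up

(println "alpha 0.1:" good "cost:" (cost good xs ys))
(println "alpha 1.0:" bad)
```

On this toy data, an alpha of 0.1 converges on the intercept 1 and slope 2, while an alpha of 1.0 overshoots further on every iteration. The workable range for alpha depends on the scale of the data, so these particular values should not be read as general recommendations.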

I need to mention again that this methodology and these equations come directly from Professor Ng’s machine learning course on Coursera that I linked above. He spends over an hour on linear regression with one variable, and if you want more information that is the place to go.

The Code

The actual calculations we are going to do are operations on matrices. When we multiply the matrix X by the matrix Theta, we obtain a matrix of predictions that can be compared element by element with the matrix Y. The same results could be obtained by looping over each test case, but expressing the computations as matrix operations yields simpler equations, shorter code and better performance.

I used the clatrix matrix library for the calculations.

One other thing to note: in the equations above, Theta[0] is treated differently than Theta[1]. It is not multiplied by any x terms, either in the predictions or in the adjustments after the predictions. If we add an additional column to our X matrix, an X[0], and make all of the values in this column 1, we then no longer have to make a distinction between Theta[0] and Theta[1].

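    ;; cl is an alias for clatrix.core: (require '[clatrix.core :as cl])
    (defn add-ones
      "Add an X[0] column of all 1's to use with Theta[0]"
      [x]
      (let [n-rows   (first (cl/size x))      ; one 1 per training example
            ones     (vec (repeat n-rows 1))
            ones-col (cl/matrix ones)]        ; column with as many rows as x
        (cl/hstack ones-col x)))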

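    (defn linear-regression
      "Gradient descent on training inputs x and answers Y, with learning
       rate a, for i iterations. Returns Theta as a 1xn matrix."
      [x Y a i]
      (let [m (first (cl/size Y))   ; number of training examples
            X (add-ones x)]
        (loop [Theta (cl/zeros 1 (second (cl/size X)))
               i     i]
          (if (zero? i)
            Theta
            (let [ans      (cl/* X (cl/t Theta))   ; predictions, m x 1
                  diffs    (cl/- ans Y)            ; prediction errors, m x 1
                  dx       (cl/* (cl/t diffs) X)   ; summed gradient terms, 1 x n
                  adjust-x (cl/* dx (/ a m))]      ; scale by alpha / m
              (recur (cl/- Theta adjust-x)
                     (dec i)))))))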

The linear-regression function takes as parameters the X and Y values that we use for training, the learning rate and the number of iterations to perform. We add a column of ones to the passed in X values. We initialize the Theta vector, setting all the values to 0.

At this point X is a matrix of 380 rows and 2 columns. Theta is a matrix of 1 row and 2 columns. If we take the transpose of Theta (turn the rows into columns, and columns into rows) we get a new matrix, Theta’, which has 2 rows and 1 column. Multiplying the matrix X with Theta’ yields a matrix of 380x1 containing all of the predictions, and the same size as Y.

Taking the difference between the calculated answers and our known values yields a 380x1 matrix. We transpose this matrix, making it 1x380, and multiply it by our 380x2 X matrix, yielding a 1x2 matrix. We multiply each element in this matrix by a and divide by m, ending up with a 1x2 matrix which has the amounts we want to subtract from Theta, which is also a 1x2 matrix. All that is left to do is recur with the new values for Theta.
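To trace these shapes on something smaller than 380 rows, here is a sketch that performs the same sequence of operations on a 3-row toy data set. It uses plain nested vectors and hand-written `transpose` and `mmul` helpers instead of clatrix, so every name and number in it is an assumption made for illustration.

```clojure
(defn transpose
  "Turn rows into columns."
  [m]
  (apply mapv vector m))

(defn mmul
  "Multiply an r x k matrix by a k x c matrix (nested vectors)."
  [a b]
  (let [bt (transpose b)]
    (mapv (fn [row]
            (mapv (fn [col] (reduce + (map * row col))) bt))
          a)))

(defn shape
  "Rows and columns of a nested-vector matrix."
  [m]
  [(count m) (count (first m))])

(def X     [[1.0 1.0] [1.0 2.0] [1.0 3.0]]) ; 3x2, ones column already added
(def Y     [[3.0] [5.0] [7.0]])             ; 3x1 known answers
(def Theta [[0.0 0.0]])                     ; 1x2, all zeros to start

(def ans   (mmul X (transpose Theta)))                 ; 3x2 * 2x1 -> 3x1
(def diffs (mapv (fn [[p] [y]] [(- p y)]) ans Y))      ; 3x1
(def dx    (mmul (transpose diffs) X))                 ; 1x3 * 3x2 -> 1x2

(println (shape ans) (shape diffs) (shape dx)) ; prints [3 1] [3 1] [1 2]
(println dx)                                   ; prints [[-15.0 -34.0]]
```

The first entry of dx is the plain sum of the differences and the second is the sum of each difference times its x value, which are the two sums in the update equations for Theta[0] and Theta[1].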

The Results

Since I am going to be performing the same operations on three different data sets, I wrote a couple of helper functions. plot-it uses Incanter to display a scatter plot of the data. reg-epl calls the linear-regression function specifying a learning rate of 0.0001 and 1,000,000 iterations. I also have a get-matrices function, which downloads the data and creates the X and Y matrices for the specified fields.

    (def wins (get-matrices [:win] :pts))
    (plot-it wins)
    (def win-theta (reg-epl wins))
    (println "Wins-points: " win-theta)

Yields this graph

and these results

Wins-points: A 1x2 matrix

-------------

1.24e+01 2.82e+00

The relationship between wins and points is obvious in the graph. The equation we developed estimates each win as being worth 2.82 points, instead of the correct 3. This is because it had no way to account for draws, so it used a high intercept to get those extra points in there.

A team with 0 wins would be expected to have 12.4 points. A team with 10 wins would have 12.4 + 2.82 * 10 = 40.6 points. A team with 25 wins would have 12.4 + 2.82 * 25 = 82.9 points.

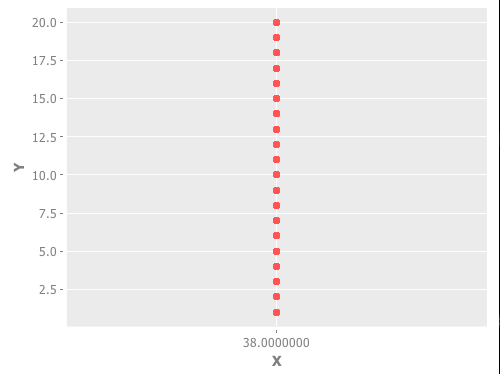

    (def played (get-matrices [:played] :rank))
    (plot-it played)
    (def played-theta (reg-epl played))
    (println "played-rank: " played-theta)
    (println "expected finish:" (+ (first played-theta)
                                   (* 38 (second played-theta))))

Playing 38 games gives you an equal chance of having a finishing position anywhere between 1 and 20. The graph gives a good illustration of what no-correlation looks like.

If we use the terms in Theta to find the expected finishing position for a team playing 38 games, we find exactly what we expect, 10.5.

played-rank: A 1x2 matrix

-------------

7.27e-03 2.76e-01

expected finish: 10.499999999999996

Ok, now that we have seen what it looks like when we have a strong correlation, and no correlation, is there a correlation between goals and points?

    (def goals (get-matrices [:for] :pts))
    (plot-it goals)
    (def goal-theta (reg-epl goals))
    (def goal-lm (find-lm goals))
    (println "goals-points: " goal-theta)
    (println "goals-points (incanter): " goal-lm)

Looking at the graph, while not quite as sharp as the wins-points graph, it definitely looks like scoring more goals earns you more points.

To double check my function, I also used Incanter’s linear-model function to generate an intercept and slope. (And yes, I am relieved that they match.)

goals-points: A 1x2 matrix

-------------

2.73e+00 9.81e-01

goals-points (incanter): [2.7320304686089685 0.9806635460888629]

We can superimpose the line from our regression formula on the graph, to see how they fit together.

    (def goal-plot (scatter-plot (first goals) (second goals)))
    (defn plot-fn [x]
      (+ (* (second goal-theta) x) (first goal-theta)))
    (def plot-with-regression (add-function goal-plot plot-fn 0 100))
    (view plot-with-regression)

The Answer

We can calculate how many points we would expect Manchester United to earn based on their 86 goals in 2012-13 and 64 goals in 2013-14.

    (println "86 goals = " (+ (first goal-theta)
                              (* (second goal-theta) 86)))
    (println "64 goals = " (+ (first goal-theta)
                              (* (second goal-theta) 64)))

86 goals = 87.07011197597255

64 goals = 65.49481001604704

In the last year under Sir Alex, Manchester United earned 89 points, 2 more than the formula predicts. In their year under David Moyes, they earned 64 points, 1.5 fewer than the formula predicts.

Of the 25-point decline in Manchester United’s results, 21.5 points can be attributed to the failure of the offense under Moyes, and 3.5 points can be attributed to bad luck or other factors.
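The split can be reproduced with a few lines of arithmetic. This sketch copies the intercept and slope from the Incanter output above; the names `expected-points`, `predicted-drop` and `unexplained` are made up for the illustration.

```clojure
;; Intercept and slope copied from the Incanter linear-model output
(def intercept 2.7320304686089685)
(def slope     0.9806635460888629)

(defn expected-points
  "Points the regression line predicts for a given number of goals."
  [goals]
  (+ intercept (* slope goals)))

;; drop in expected points from scoring 64 goals instead of 86
(def predicted-drop (- (expected-points 86) (expected-points 64)))

;; actual drop in points: 89 in 2012-13, 64 in 2013-14
(def actual-drop (- 89 64))

;; the part of the decline the goal totals do not account for
(def unexplained (- actual-drop predicted-drop))

(println (format "predicted drop: %.2f, unexplained: %.2f"
                 predicted-drop unexplained))
```

Without rounding, the goal-related part comes out a little under 21.6 points and the remainder a little over 3.4, which is in line with the rounded 21.5 and 3.5 quoted above.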

Manchester United’s attacking style isn’t just fun to watch, it is also the reason they win so much. Hopefully the team’s owners have learned that lesson, and will stick to attack-minded managers in the future.

You can find all of the code for the project on github.
